Senderscore

Thoughts on SenderScore

Kevin Senne posted over on the Oracle blog about how we need to stop caring about SenderScore and why it’s not as useful a metric as it used to be.

I can’t argue with anything he’s said. I think there is way too much focus on IP reputation and SenderScore. There’s so much more to deliverability than just one or two factors.

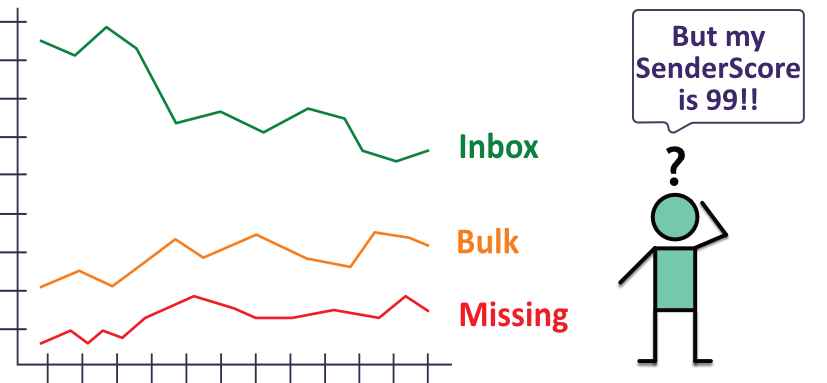

In fact, if you’ve been to any of my recent webinars or talks you will probably have seen some version of this image in my slides:

Basically, just because you have a great SenderScore doesn’t mean you’re going to have good delivery. Likewise, having a poor SenderScore doesn’t mean your mail is destined to be undelivered.

I tell clients, and anyone else who asks about SenderScore, that it reflects the data Return Path receives, run through their proprietary algorithms to produce a score. That score is relevant for the ISPs that pay attention to it. But most ISPs make the deliver-or-don’t-deliver decision based on their own internal data, not on the IP’s SenderScore.

Reputation is more complex than a single number

I checked our SenderScore earlier this month, as quite a few people mentioned that they’d seen SenderScore changes – likely due to changed algorithms and new data sources.

It sure looks like something changed. Our SenderScore was, for a while, zero out of a hundred. That’s as bad as it’s possible to get. I didn’t get a screenshot of the zero score, but I grabbed this a couple of days later:

Is Return Path wrong? No. Given what I know about the traffic from our server (very low volume, particularly to major consumer domains, plus a negligible amount of unavoidable backscatter because we forward role addresses for a non-profit to final recipients at AOL), that’s not an unreasonable rating. And I’m fairly sure that as they dial in their new algorithms and gather more history, it’ll get closer. (Though I’m a bit surprised that fewer than 60 emails a day counts as moderate volume.)

But all our mail is delivered fine. I’ve seen none of my mail bounce. It’s very rare for someone to mention that our mail has ended up in a bulk folder. I’ve received the replies I expected from all the mail I’ve sent. Recipient ISPs don’t seem to see any problems with our mail stream.

A low reputation number doesn’t mean you actually have a problem; it’s just one data point. And a metric geared to model one particular sort of sender (very high-volume senders, for example) isn’t going to be as useful in modeling very different senders. You need to understand where a particular measure comes from and use it in combination with all the other information you have, rather than focusing solely on one number.

I do not think that means what you think it means

Yesterday, I looked at the analysis of ESP delivery done by Mr. Geake. Today we’ll look at some of his conclusions.

“Being blacklisted most likely suggests that sender IP either sends out to a great deal of unknown or angry recipients.” That’s not how most blocklists work. Most blocklists are driven by spam traps or by the personal mailboxes of the list maintainers. The only blocklist that took listing requests from the public was the old MAPS RBL, and I don’t believe it does so any longer.

Blocking at ISPs is often a sign of sending a lot of mail to unknown, angry, or unengaged recipients. But most ISPs don’t make their block lists public. Some let anyone look up an IP address, so if the post had included the sending IPs we could check. It didn’t, so we can’t.

“[…] if you share this IP with Phones4U then only 62% of your emails will be accepted by a recipient’s email server. That’s before they hit the junk filter. I wouldn’t want to pay for that.” This conclusion relies on the Sender Score “accepted rate” number, a figure I don’t rely on for much. I’ve never been able to reconcile it with what client logs tell me about acceptance. For instance, one IP address I know of shows a 4.4% accepted rate, yet 19 out of 20 emails from that IP do not bounce. In fact, it’s rare to see any mail from this IP bounce.
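To make the mismatch concrete, here is a minimal Python sketch of how you might compute an accepted rate from your own delivery logs. It assumes a hypothetical log format with one final SMTP reply code per message (after any retries), and counts any 2xx reply as accepted; the `acceptance_rate` helper is illustrative, not anything Return Path publishes, and says nothing about how Sender Score calculates its figure.

```python
from collections.abc import Iterable


def acceptance_rate(reply_codes: Iterable[int]) -> float:
    """Fraction of messages the receiving servers accepted.

    Assumes (hypothetically) one final SMTP reply code per message,
    recorded after any retries: 2xx counts as accepted, anything else
    (4xx that never cleared, 5xx bounces) counts as not accepted.
    """
    codes = list(reply_codes)
    if not codes:
        raise ValueError("no delivery attempts to measure")
    accepted = sum(1 for code in codes if 200 <= code < 300)
    return accepted / len(codes)


# Hypothetical sample shaped like the IP above: 19 accepted, 1 bounced.
sample = [250] * 19 + [550]
print(f"{acceptance_rate(sample):.1%}")  # 95.0%
```

Measured this way, the IP above sits at 95%, nowhere near 4.4%. That gap is why I treat the published accepted rate as something to investigate rather than something to act on.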

The one thing that Mr. Geake gets right, in all of this, is that if you’re on a shared IP address with a poor sender, then you share that sender’s reputation. Their reputation can hurt your delivery.

But a dedicated IP isn’t always your best bet, either. Smaller senders may not have the volume or frequency required to build and keep a good reputation on a static IP. In these cases, sharing an IP address with similar senders may actually improve delivery.

For some senders, outsourcing email expertise is a better use of resources than dedicating a person to managing email delivery. For others, bringing mail in house and investing in staff to manage email marketing is the better choice.

Tomorrow: how do you really evaluate an ESP?

Twisting information around

One of my mailing lists was asking questions today about an increase in invitation mailings from Spotify. I’d heard about them recently, so I started digging through my mailbox to see if I’d received one of these invites. I hadn’t, but the search clued me in to a blog post from early this year that I hadn’t seen before.

Research: ESPs might get you blacklisted.

That article is full of FUD, and the author quite clearly doesn’t understand what the data he relies on means. He also doesn’t give us enough information to repeat what he did.

But I think his take on the publicly available data is common. Many people don’t quite understand what public data means or how it is collected. We can use his post as a starting point for understanding what publicly available data tells us.

The author chooses 7 commercial mailers as his examples. He claims the data on these senders will let us evaluate ESPs, but these aren’t ESPs. At best they’re ESP customers, and we don’t know that for sure. He claims that shared IPs mean shared reputation, which is true. But he never claims that these senders are on shared IPs. In fact, I would bet my own reputation on Pizza Hut having dedicated IP addresses.

The author chooses 4 publicly available reputation services to check the “marketing emails” against. I am assuming he means he checked the sending IP addresses, because none of these services let you check individual emails.

He then claims these 4 measures

Reputation monitoring sites

There are a number of sites online that provide public information about reputation of an IP address or domain name.

Gmail and SenderScore

Return Path reports that a high SenderScore (above 80) is correlated with inbox delivery at Gmail.

Public reputation data

IP-based reputation is a measure of the quality of the mail coming from a particular IP address. Because of how reputation data is collected and evaluated, it is difficult for third parties to provide a reputation score for a particular IP address. The data has to be collected in real time, or as close to real time as possible. Reputation is also very specific to the source of the data: I have seen cases where a client has a high reputation at one ISP and a low reputation at another.

All of this means there are only a limited number of public sources of reputation data. Some ISPs provide ways for senders to check their reputation at that ISP. But if a sender wants to check a broader reputation across multiple ISPs, where can they go?

There are multiple public sources of data that I use to check reputation of client IP addresses.

Blocklists provide negative reputation data for IP addresses and domain names. There is a wide range of blocklists, with differing listing criteria and different levels of trust in the industry. In general, the more widely used a list is, the more accurate and relevant it is. When investigating a client, I usually check the Spamhaus lists and URIBL/SURBL. I find these lists are good sources for discovering real issues or problems.
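For readers who want to check an IP themselves, most IP-based DNS blocklists, including the Spamhaus IP lists, are queried the same way: reverse the octets of the IPv4 address, append the list’s zone, and look up an A record. An answer generally means the address is listed; a non-existent domain means it isn’t. The minimal Python sketch below shows that convention (described in RFC 5782). The function names are hypothetical, `zen.spamhaus.org` is only an example default zone, and treating every resolution failure as “not listed” is a simplification. Domain lists such as URIBL and SURBL are queried with the domain name itself rather than reversed octets, and each list documents its own usage terms and return codes, which you should read before relying on an answer.

```python
import ipaddress
import socket
from typing import Optional


def dnsbl_query_name(ip: str, zone: str = "zen.spamhaus.org") -> str:
    """Build the DNS name used to check an IPv4 address against a DNSBL.

    The octets are reversed and the list's zone is appended, e.g.
    192.0.2.1 -> 1.2.0.192.zen.spamhaus.org.
    """
    addr = ipaddress.IPv4Address(ip)  # raises ValueError on malformed input
    reversed_octets = ".".join(reversed(str(addr).split(".")))
    return f"{reversed_octets}.{zone}"


def dnsbl_lookup(ip: str, zone: str = "zen.spamhaus.org") -> Optional[str]:
    """Return the A record for the query name if one exists, else None.

    Simplification: any resolution failure (not only NXDOMAIN) is treated
    as "not listed". What a returned 127.x.x.x code means is defined by
    each list's own documentation.
    """
    try:
        return socket.gethostbyname(dnsbl_query_name(ip, zone))
    except socket.gaierror:
        return None


print(dnsbl_query_name("192.0.2.1"))  # 1.2.0.192.zen.spamhaus.org
```

RFC 5782 asks IPv4 lists to carry 127.0.0.2 as a test entry, so `dnsbl_lookup("127.0.0.2")` is a reasonable way to confirm your resolver path works before checking a client’s address.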

For an overall view of the reputation of an IP address, both positive and negative, I check senderbase.org, provided by IronPort, and senderscore.org, provided by Return Path.

All reputation sources have limitations. The primary limitation is that they are only as good as their source data, and that source data is kept confidential. Another major limitation is that a reputation source is only as good as the reputation of its maintainer. If the maintainer doesn’t behave with integrity, there is no reason for me to trust their data.

I use a number of criteria to evaluate reputation providers.